Benchmarks have influenced artificial intelligence (AI) in defining research goals and allowed researchers to track progress toward those goals.

An important part of intelligence is perception, experiencing the world through the senses. It is becoming increasingly important in areas such as robotics, self-driving cars, personal assistants, and medical imaging that develop agents with a human-level perceptual understanding of the world.

Perceiver, Flamingo and BEiT-3 are some examples of multimodal models aiming to be more comprehensive models of perception. However, since no set benchmark was available, their assessments were based on several specialized datasets. These benchmarks include Kinetics for detecting video actions, an audio set for classifying audio events, MOT for object tracking, and VQA for answering image questions.

Many other perceptual benchmarks are also currently used in AI research. Although these benchmarks have enabled incredible advances in the design and development of AI model architectures and training methods, each focuses exclusively on a small subset of perception: visual question-and-answer tasks typically focus on a high-level semantic scene understanding. Object tracking tasks typically capture the appearance of individual objects at a lower level, e.g. B. color or texture. Image benchmarks do not include temporal aspects. And only a small number of benchmarks provide tasks across visual and auditory modalities.

New DeepMind research has produced a collection of real-world event movies, specifically constructed and labeled according to six different types of tasks to address many of these issues. They are:

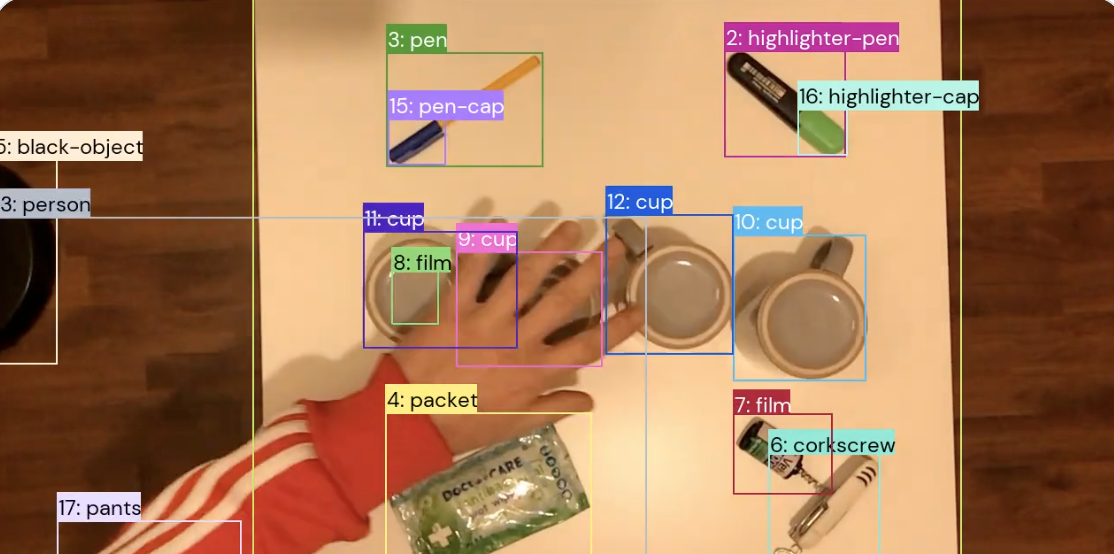

- Item tracking: At the beginning of the film, a frame is drawn around an object, and the model must return a full track throughout the film.

- Localization of temporal actions: The model must categorize and locate a given set of actions in time.

- Temporal sound localization: The model needs to temporally locate and categorize a range of sounds.

- Multiple Choice Video Question Answer consists of text questions about the video, each with three possible answers.

- Answering text questions about the video Using a model that must return one or more object traces is known as a Grounded Video Question response.

To create a balanced dataset, the researchers used datasets such as CATER and CLEVRER and created 37 videoscripts with different permutations. The videos show simple games or everyday tasks and allow them to specify the tasks that require knowledge of semantics, physical understanding, temporal reasoning or memory and abstraction skills.

The model developers can use the tiny fine-tuning set (20%) in the perception test to explain the nature of the tasks to the models. The remaining data (80%) consists of a sustained test split, where performance can only be evaluated through our ratings server, and a public validation split.

The researchers test their work in the six arithmetic tasks, and the evaluation results are comprehensive in many aspects. For a more thorough investigation, they also mapped out questions about different situations presented in the videos and different types of reasoning required to answer the questions for the visual question-and-answer activities.

When creating the benchmark, it was important to ensure that the participants and the scenes in the videos were diverse. To achieve this, they selected volunteers from multiple nations representing different races and ethnic groups and genders to have a different portrayal in each type of video script.

The perceptual test is intended to stimulate and guide future investigation of comprehensive perceptual models. In the future, they hope to collaborate with the multimodal research community to add more measures, tasks, annotations or even languages to the benchmark.

This Article is written as a research summary article by Marktechpost Staff based on the research paper 'Perception Test: A Diagnostic Benchmark for Multimodal Models'. All Credit For This Research Goes To Researchers on This Project. Check out the paper, github link and reference article. Please Don't Forget To Join Our ML Subreddit

![]()

Tanushree Shenwai is a Consulting Intern at MarktechPost. She is currently pursuing her B.Tech from Indian Institute of Technology (IIT), Bhubaneswar. She is a data science enthusiast and is very interested in the application areas of artificial intelligence in various fields. She is passionate about exploring new technological advances and their application in real life.

#DeepMind #introduces #Perceptual #Test #multimodal #benchmark #realworld #video #assess #perceptual #abilities #machine #learning #model

Leave a Comment