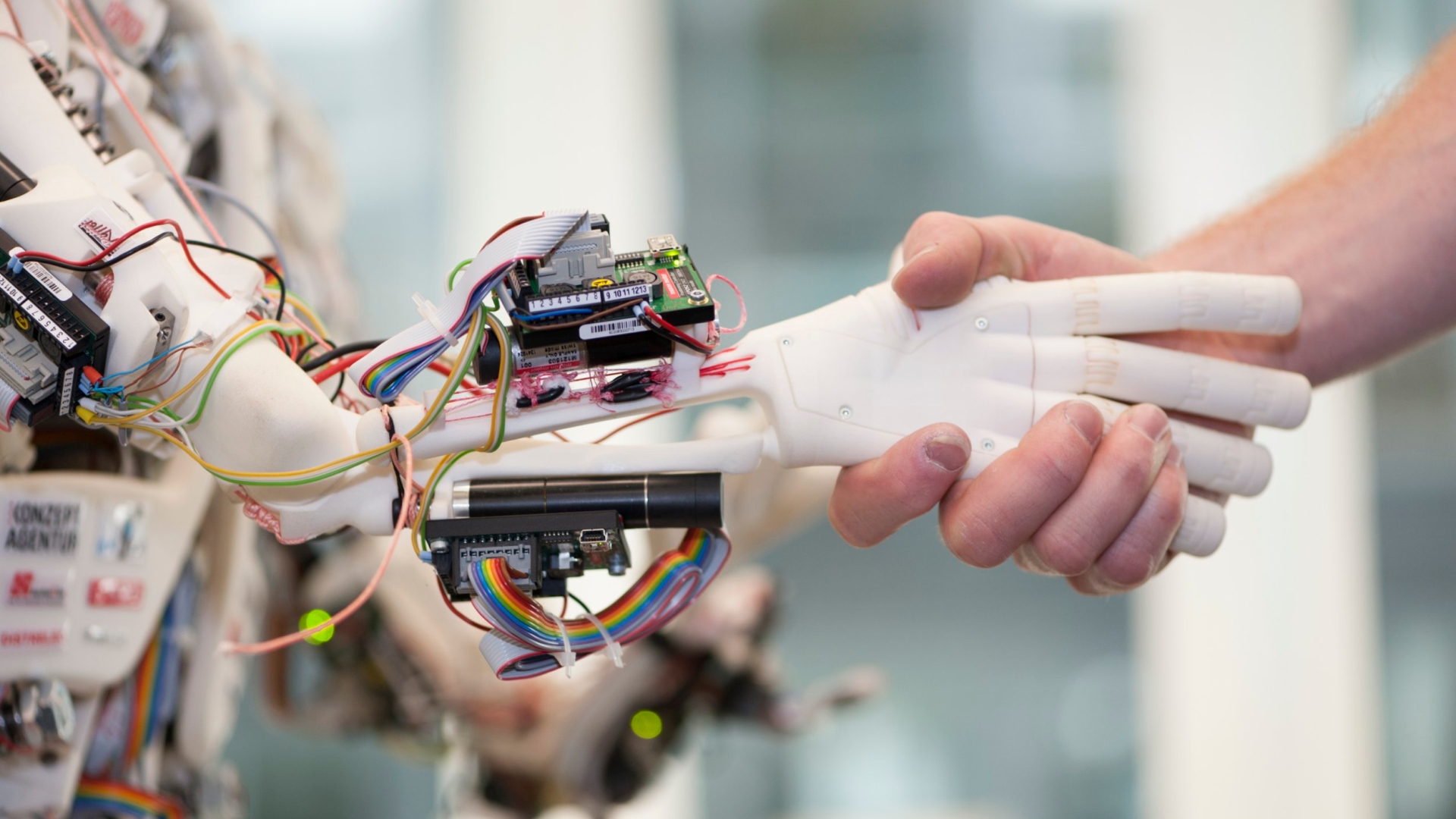

FOR artificial intelligence researchers, the upper limit of AI lies in creating a computer that is smarter than any human.

However, scientists have expressed concern that if such an invention becomes a reality, it will not be under the control of its human creators.

A study published in the Journal of Artificial Intelligence Research concludes that an artificial superintelligence would be uncontainable because of humans’ inability to comprehend its power.

The levels of AI

Artificial intelligence is divided into three categories.

The lowest level of AI is Artificial Narrow Intelligence (ANI), an AI program that excels at a single task.

For example, an AI bot programmed to play chess is unrivaled in the game but unable to perform other tasks.

Artificial General Intelligence (AGI), the next level of AI, is a computer that is just as intelligent as the average human.

But the final tier of AI is Artificial Superintelligence (ASI), an AI bot vastly smarter than any human who has ever lived. Researchers are pessimistic about humans’ ability to control an ASI, if one were ever created.

Understanding superintelligence

Nick Bostrom, an Oxford scholar and one of the leading minds on AI, explains the range of intelligence in his book Superintelligence.

Bostrom compares the intellect of the “village idiot” to the mind of Albert Einstein.

At first glance, it seems like there are big differences between the two.

But on a scale that takes all minds into account, the village idiot and Einstein are actually quite close – both are significantly smarter than any animal, fish or insect.

An artificially superintelligent system that significantly surpasses Einstein’s intellect could be as incomprehensible to humans as we are to a beetle.

The study explains that because of this, we could never understand the motivations, intentions, or will of an artificially superintelligent computer — and with this cognitive divide, any hope of containing ASI is dashed.

“Superintelligence is multifaceted and therefore may be able to mobilize a variety of resources to achieve goals that humans may not be able to understand or even control,” the study authors write.

Even when an ASI is given a benign task, people cannot know how it will interpret or perform it.

“As an illustrative example, a superintelligence tasked with maximizing happiness in the world without deviating from its goal might find it more efficient to destroy all life on earth and create faster computerized simulations of happy thoughts,” the study continued.

ASI apocalypse

AI researchers and technology executives like Elon Musk are openly concerned about human extinction caused by ASI.

The introduction of a new higher form of consciousness has typically spelled trouble for the less intelligent beings below.

For example, early Homo sapiens once shared the Earth with eight other human species.

The Conversation reported that these less intelligent bipeds were wiped out by Homo sapiens’ resource consolidation, climate impacts and even war or violence.

Yuval Noah Harari, an Israeli historian and professor at the Hebrew University of Jerusalem, put it succinctly in his book Homo Deus: A Brief History of Tomorrow:

“You want to know how super-intelligent cyborgs treat ordinary flesh-and-blood humans? Better start studying how humans treat their less intelligent animal relatives.”

There is much debate as to when ASI will be achieved.

Ray Kurzweil, a respected computer scientist in the field of AI, estimates it will arrive in 2045, only 23 years away and within the lifetime of many people currently living on Earth.
